What we do

Artificial intelligence is reshaping electricity consumption patterns in modern data centers, creating new challenges and opportunities for energy sellers, institutional energy buyers, and energy regulators. While data centers have traditionally operated with a stable baseload profile, AI introduces distinctly different power requirements that must be understood for effective grid planning and long-term energy procurement strategies. In particular, the power consumption of AI training differs significantly from that of AI inference, affecting both infrastructure needs and system reliability. AI training workloads drive extremely high and sustained electricity demand. Large-scale models rely on thousands of GPUs (Graphics Processing Units) running at near-full capacity for weeks or even months, creating a long-duration, high-intensity baseload that far exceeds typical ICT operations. Power draw per rack frequently surpasses 60 kW, with peaks above 100 kW, which increasingly requires advanced cooling technologies such as liquid cooling. In addition, model checkpoints during AI training cause rapid internal load fluctuations, creating short-term volatility that must be considered in grid stability assessments and energy contracting decisions.

As a result of these trends, and the rapid construction of hyperscale data centers, global data center electricity demand is projected to reach 945 TWh by 2030. Looking toward 2035, the International Energy Agency (IEA) forecasts a range of 700 to 1,720 TWh, depending on how much power generation and grid capacity becomes available. The IEA's base-case outlook anticipates global data center consumption of 1,200 TWh by 2035. This underscores the strategic importance of integrated energy planning, robust regulatory frameworks, and long-term procurement mechanisms that can support the growing electricity needs of the global digital economy.

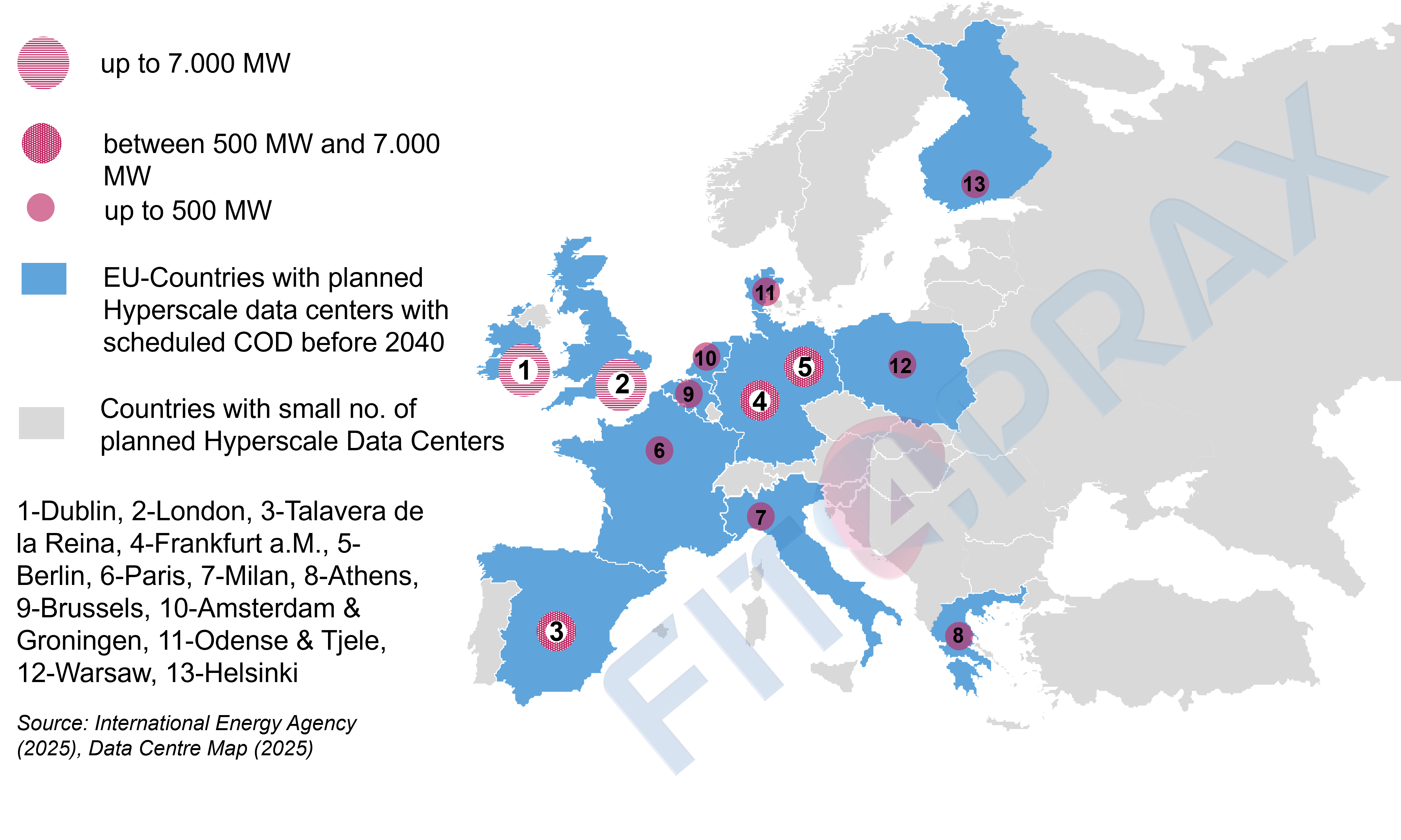

The map above highlights the most significant European markets for planned AI data centers. However, because vacancy rates in the traditional Tier 1 locations are only 4–7%, other markets such as Sweden or Norway may also emerge as attractive data center locations.

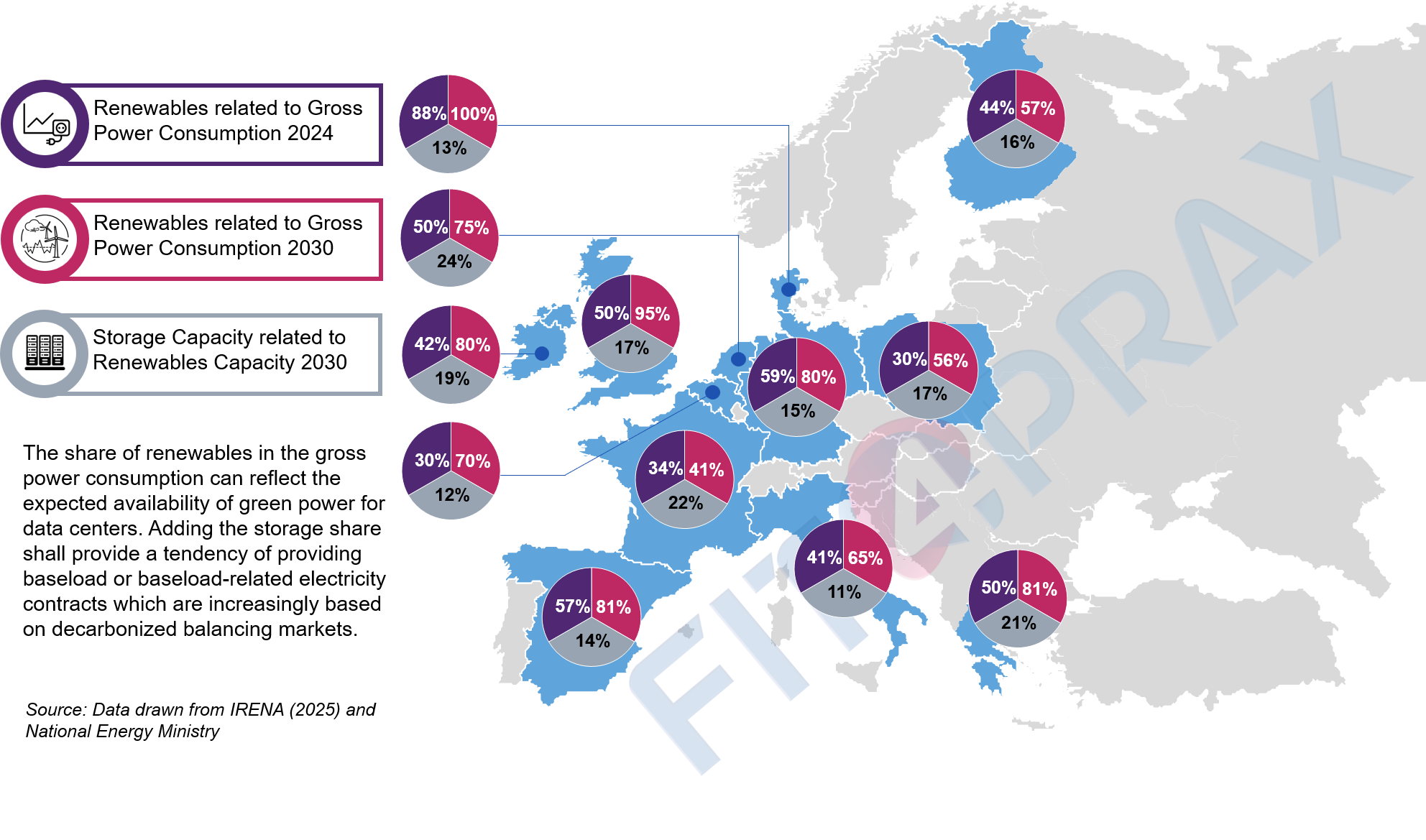

The rapid expansion of hyperscale data centers has become a key driver of the global digital economy, but it also intensifies the challenge of balancing supply reliability, decarbonization, and cost efficiency. These large-scale AI facilities require a massive, continuous electricity supply during both high-intensity AI training cycles and ongoing inference operations, which places significant pressure on local and regional grid infrastructure. At the same time, the accelerating energy transition is boosting renewable generation across major markets. Yet because scalable, operational energy storage remains scarce, variable renewable output still collides with the near-constant 24/7 load profile typical of hyperscale environments. This imbalance cannot currently be resolved at low cost.

Although Europe will remain a relevant player in the international hyperscaler market through 2030, its growth in data center electricity consumption is significantly lower than that of the USA and China. In 2024, European data centers consumed approximately 70 TWh of electricity (vs. 180 TWh in the USA and 100 TWh in China), about 16% of global data center power consumption that year. European consumption is expected to grow to about 115 TWh by 2030. This growing demand must be managed so as to optimize the triangle of “Decarbonization”, “Price Efficiency”, and “Supply Security”. For regions hosting hyperscale data centers, this challenge is amplified: their high power demand significantly increases the need for grid balancing and reserve capacity. When renewable energy is unavailable, balancing is frequently provided by fossil-based peaking plants, which not only raise electricity prices but also counteract decarbonization goals. In the USA and China, fossil fuels (especially natural gas) are often more readily available and cheaper. This simplifies energy supply for data centers compared with the European core hyperscale markets, but runs counter to decarbonization targets. The map below shows the growth targets for renewable energy and storage solutions in current European hyperscale markets.
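The figures above can be restated as an annual growth rate. The following Python sketch uses only the numbers quoted in this section. It computes the implied compound annual growth rate (CAGR) of European data center demand and the global total implied by Europe's 16% share. The function name `cagr` is illustrative, and the results are derived arithmetic rather than independent forecasts.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate from `start` to `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


# Inputs quoted in the text; nothing else is assumed.
EUROPE_2024_TWH = 70.0
EUROPE_2030_TWH = 115.0
EUROPE_SHARE_OF_GLOBAL_2024 = 0.16

# Growth rate needed to go from 70 TWh (2024) to 115 TWh (2030).
europe_growth = cagr(EUROPE_2024_TWH, EUROPE_2030_TWH, years=2030 - 2024)
# Global total implied by Europe's 16% share in 2024.
implied_global_2024_twh = EUROPE_2024_TWH / EUROPE_SHARE_OF_GLOBAL_2024

print(f"Implied European CAGR 2024-2030: {europe_growth:.1%}")
print(f"Implied global data center demand 2024: {implied_global_2024_twh:.0f} TWh")
```

With these inputs, European data center demand would have to grow by roughly 8.6% per year between 2024 and 2030. The 16% share implies global data center consumption of roughly 440 TWh in 2024.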

Besides sufficient renewable generation, supply stability requires highly resilient and responsive balancing markets backed by more storage capacity. The design of balancing markets is therefore becoming increasingly critical for grid stability, cost efficiency, and effective investment signals.

Across Europe, balancing markets can take the form of energy-only markets, capacity markets, or a hybrid model, as seen for example in Germany and France. For large hyperscalers, energy-only market structures often offer greater efficiency because they reward real-time system flexibility and align more closely with the continuous, high-load consumption profiles of AI-driven data centers. This framework can incentivize investment in demand response, on-site generation, and flexible procurement strategies that match hyperscalers’ operational needs. Hybrid systems that combine elements of energy-only and capacity markets can also be effective, especially in regions facing structural adequacy challenges. They keep firm capacity available while still using market-based signals to optimize dispatch and system balancing. So-called Non-Market Resources (NMRs), i.e. balancing capacity held as a pure reserve outside market mechanisms such as auctions, can also play a stabilizing role in future power systems serving hyperscale data centers, but they will not be deployed uniformly across Europe. In many cases, NMRs are geographically too distant from hyperscale clusters, which limits their ability to address local reliability constraints. Consequently, NMRs should be viewed as a complementary mechanism: valuable, but not a universal solution for hyperscale energy resilience.

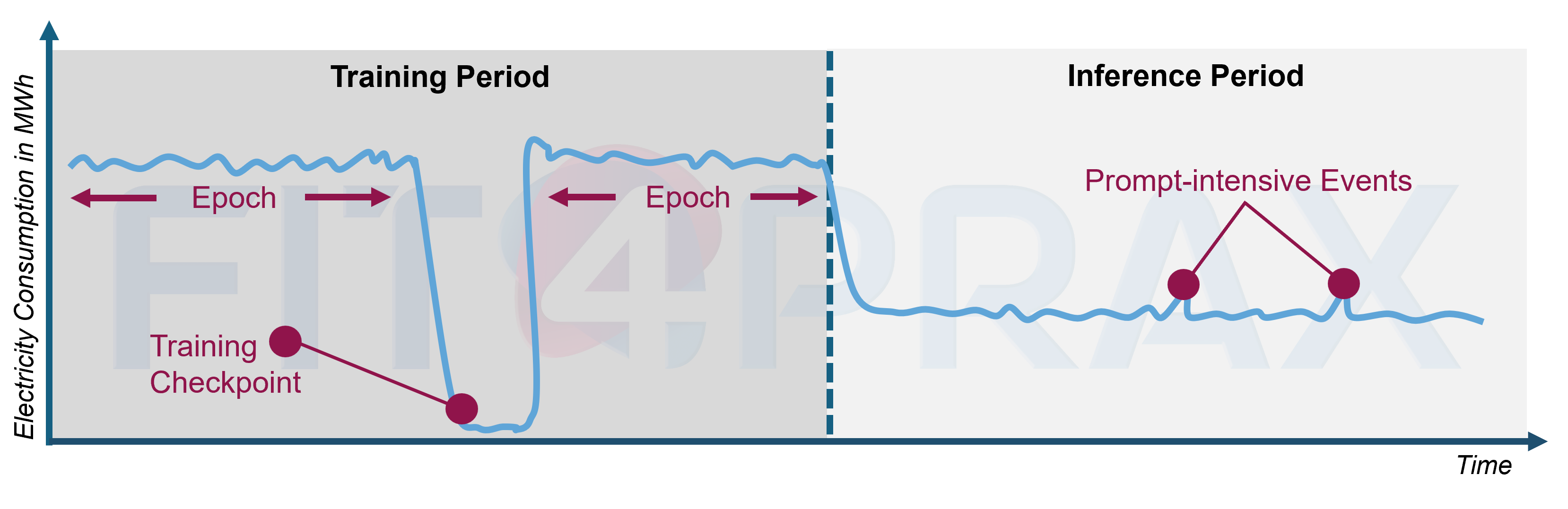

Hyperscale data centers have a distinctive consumption profile. It is essentially baseload-driven, but shows AI-specific characteristics during the training and inference (operation) phases. During AI training, hyperscale data centers exhibit exceptionally high and steady electricity demand. GPU clusters operate at near-full utilization, creating a continuous, intensive load that can last for days or weeks. However, this high load is periodically interrupted at the end of each training epoch, when the system stores updated model weights (checkpointing). These interruptions can cause short but sharp drops in power consumption. From a grid-stability perspective, event-driven checkpointing is particularly challenging. Because these checkpoints are triggered dynamically by computational progress rather than on a fixed schedule, they cannot be predicted precisely. As a result, sudden load reductions interrupt an otherwise stable high-demand profile, creating volatility that must be absorbed by the balancing area and the responsible balancing group.
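Because these dips cannot be scheduled in advance, one practical starting point is to detect them in metered load data after the fact. The sketch below is minimal and rests on several assumptions. It assumes one-minute load samples and a hypothetical 30% threshold. It flags every interval in which load falls by more than that fraction relative to the previous sample. The function name `sharp_drops`, the load profile, and the threshold are illustrative, not measured values.

```python
def sharp_drops(load_mw: list[float], threshold: float) -> list[int]:
    """Return indices i where load falls by more than `threshold` (a fraction)
    relative to the previous sample i-1. Rises in load are ignored."""
    return [
        i
        for i in range(1, len(load_mw))
        if load_mw[i - 1] > 0
        and (load_mw[i - 1] - load_mw[i]) / load_mw[i - 1] > threshold
    ]


# Hypothetical one-minute samples (MW) of a training cluster with two checkpoint dips.
training_load = [95.0, 96.0, 95.0, 40.0, 94.0, 95.0, 38.0, 96.0]
print(sharp_drops(training_load, threshold=0.3))  # [3, 6]
```

The two flagged indices correspond to the checkpoint dips. In practice, the threshold would need tuning against real metering data, and a mirrored check on load increases would catch sudden surges as well.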

During the inference or deployment phase, the overall power consumption of a hyperscale data center is lower than during training, but considerably less predictable. Load patterns depend heavily on user prompting behavior. Sudden increases in the number of prompts, or spikes in energy-intensive requests such as image or video generation, can create rapid and substantial load surges. These demand spikes pose a major challenge for balancing groups and grid operators, who must maintain frequency stability despite highly dynamic, user-driven consumption. When many users submit complex workloads simultaneously, the resulting short-term power peaks are difficult to forecast and require rapid-response reserves to stabilize the system. Not least because of these highly irregular load patterns, traditional electricity procurement through utilities is reaching its operational limits.

Data center operators therefore increasingly need to rely on on-site generation (their own power plants) and alternative off-site procurement channels such as Power Purchase Agreements (PPAs) to secure a stable and predictable supply. This shift is already visible in the PPA market. The major European offtakers are big tech firms that already run large data center fleets and have committed to investing extensively in hyperscale capacity.

Classical (single-contractor) power purchase agreements rely on a direct contractual relationship between an offtaker and a specific generation asset. They are therefore inherently tied to a particular technology, such as onshore wind, and to the physical performance of that project. These contracts typically define either variable deliveries based on actual production or fixed volumes to be supplied at predetermined times. For hyperscale data centers, the requirements are even more demanding. They involve exceptionally large delivery volumes that need correspondingly large generation assets, and they often require fixed quantities delivered in short time intervals to match a baseload-like consumption profile. This combination introduces a range of risks to the stability of such PPAs. Large single-asset exposure increases vulnerability to underperformance, outages, or project development delays. At the same time, the need for firm delivery profiles can create significant imbalance risks when intermittent renewable technologies are involved. As a result, the traditional asset-specific PPA structure may struggle to provide the reliability, flexibility, and delivery precision that hyperscale operators need for their continuously expanding and highly stable load patterns.
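The imbalance risk described above can be made concrete. The following Python sketch uses a hypothetical six-hour wind profile and a hypothetical flat commitment of 100 MWh per hour. It compares a single asset's hourly output with the baseload delivery obligation and totals the resulting shortfall and surplus. `baseload_imbalance` and `ImbalanceSummary` are names introduced here for illustration only.

```python
from dataclasses import dataclass


@dataclass
class ImbalanceSummary:
    shortfall_mwh: float  # energy the seller must buy in to meet the commitment
    surplus_mwh: float    # production above the commitment, sold elsewhere
    shortfall_hours: int  # number of intervals with under-delivery


def baseload_imbalance(production_mwh: list[float], commitment_mwh: float) -> ImbalanceSummary:
    """Compare hourly production of a single asset with a flat hourly delivery commitment."""
    shortfall = surplus = 0.0
    hours_short = 0
    for produced in production_mwh:
        gap = commitment_mwh - produced
        if gap > 0:
            shortfall += gap
            hours_short += 1
        else:
            surplus += -gap
    return ImbalanceSummary(shortfall, surplus, hours_short)


# Hypothetical hourly wind output (MWh) against a hypothetical 100 MWh/h baseload commitment.
wind = [180.0, 140.0, 60.0, 20.0, 90.0, 160.0]
summary = baseload_imbalance(wind, commitment_mwh=100.0)
print(summary)  # ImbalanceSummary(shortfall_mwh=130.0, surplus_mwh=180.0, shortfall_hours=3)
```

Over the six hours, this hypothetical asset produces more than the commitment (650 MWh vs. 600 MWh). Even so, it falls short in three hours by a combined 130 MWh, which the seller must buy in or settle as imbalance energy.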

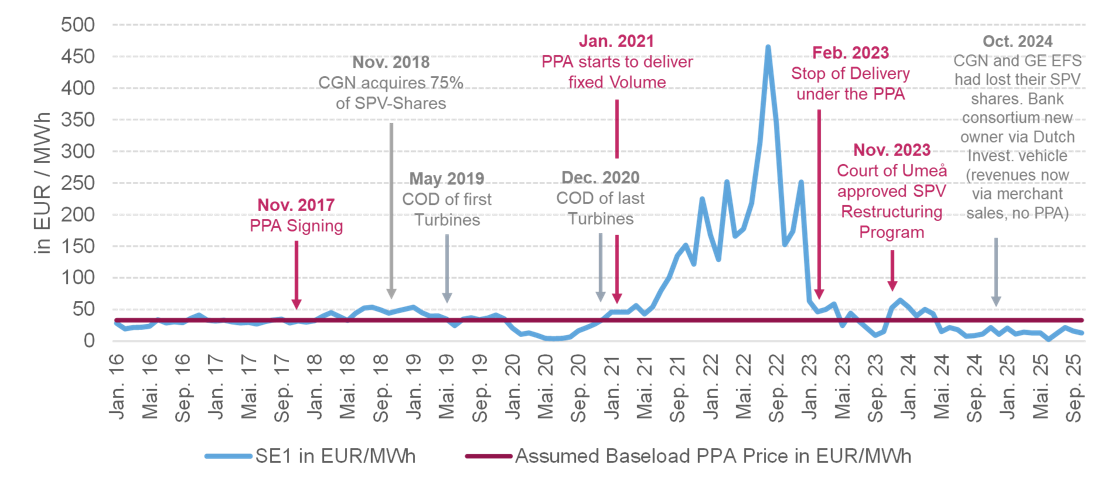

Currently, only a few large single-contractor PPAs in Europe are explicitly tied to hyperscale data centers dedicated to AI workloads, and deal terms are normally not made public. However, one of the largest and most comparable long-term PPAs was struck in northern Sweden. Norsk Hydro signed a contract with the onshore wind farm Markbygden Ett AB, which at the time was largely owned by the Chinese state-owned enterprise China General Nuclear Power Group (CGN) via its European arm. This PPA is particularly relevant for hyperscale data center use cases because it combines a very large volume with a baseload-like supply profile. Markbygden committed to a fixed annual delivery of 1.65 TWh for the period 2021–2039, while the wind farm’s estimated P50 production was about 2.2 TWh p.a. In other words, about 75% of the P50 (expected) generation was under contract and had to be delivered, creating a quasi-firm “green baseload” volume.

Moreover, the agreement covered certificates as well as energy, making it structurally similar to what a hyperscaler might require: fixed volumes in short time intervals, a long tenor, and a dependable profile that supports both operational stability and sustainability objectives. Although this PPA was originally designed to serve industrial demand (Hydro’s smelting operations), its scale, duration, and structure therefore make it an interesting benchmark. It shows how a hyperscale AI data center might structure a PPA today to secure a large, stable, long-term supply of green power.
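The Markbygden figures quoted above can be checked with simple arithmetic. The sketch below computes two values: the contracted share of the P50 estimate, and the fraction by which annual output could fall below P50 before the annual total no longer covers the contract. `contract_cover` is a hypothetical helper name, and the inputs are the two figures given in the text.

```python
def contract_cover(contracted_twh: float, p50_twh: float) -> tuple[float, float]:
    """Return (share of expected P50 output that is contracted,
    fraction by which annual output may fall below P50 before the
    annual total no longer covers the contracted volume)."""
    share = contracted_twh / p50_twh
    return share, 1 - share


# Figures from the Markbygden example in the text.
share, headroom = contract_cover(contracted_twh=1.65, p50_twh=2.2)
print(f"Contracted share of P50: {share:.0%}")
print(f"Annual production headroom below P50: {headroom:.0%}")
```

This annual view understates the risk of a baseload commitment. Deliveries are due in every interval, so even a year at or above P50 can contain many low-wind hours that must be covered with purchased power.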

In November 2017, Markbygden Ett AB signed the long-term baseload PPA with Norsk Hydro for the period 2021–2039, requiring the wind farm to deliver 1.65 TWh of electricity per year at a fixed price. At the time, it was one of the world’s largest corporate wind PPAs and formed a central pillar of the project’s financing, supported by several banks. In February 2023, deliveries under the PPA were fully suspended. Soaring spot market prices had made buying replacement electricity during low-wind periods significantly more expensive than the fixed contract price. The transaction is a clear example of the risks associated with large-volume baseload PPAs structured as single-counterparty agreements. When electricity prices rise sharply while a renewable asset is under-producing, the project operator is forced to buy large amounts of replacement power at high market prices. This can severely strain, or even exhaust, the liquidity of such infrastructure assets. In the case of Markbygden, this dynamic ultimately led to a delivery stop and a full restructuring of the wind farm in 2024. As part of that process, the original PPA was cancelled and ownership of the asset changed hands.
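The price mechanism behind such a suspension can be sketched in a few lines. Assume the seller must buy each missing MWh at the spot price and deliver it at the fixed PPA price. The net cost is then the shortfall volume multiplied by the spread between the two prices. All volumes and prices below are hypothetical and are not the actual Markbygden contract terms. `replacement_cost` is an illustrative name.

```python
def replacement_cost(shortfalls_mwh: list[float], spot_eur_per_mwh: list[float],
                     fixed_eur_per_mwh: float) -> float:
    """Net cost to the seller of buying each interval's shortfall at spot
    and delivering it at the fixed PPA price (a negative result is a net gain)."""
    if len(shortfalls_mwh) != len(spot_eur_per_mwh):
        raise ValueError("need one spot price per shortfall interval")
    return sum(q * (spot - fixed_eur_per_mwh)
               for q, spot in zip(shortfalls_mwh, spot_eur_per_mwh))


# Hypothetical shortfall hours and prices, for illustration only.
shortfalls = [40.0, 80.0, 10.0]          # MWh missing in three low-wind hours
calm_market = [45.0, 50.0, 40.0]         # EUR/MWh
stressed_market = [45.0, 300.0, 120.0]   # EUR/MWh
FIXED_PRICE = 35.0                       # EUR/MWh, hypothetical PPA price

print(replacement_cost(shortfalls, calm_market, FIXED_PRICE))      # 1650.0
print(replacement_cost(shortfalls, stressed_market, FIXED_PRICE))  # 22450.0
```

In the stressed price scenario, the same 130 MWh of shortfall costs more than 13 times as much. This shows how quickly replacement purchases can erode the liquidity of a single-asset project.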

The case illustrates not only the importance of maintaining a degree of independence from traditional utilities, but also the need for diversification within self-managed procurement channels. Some market participants argue that the era of very large single-buyer PPAs is already ending and will give way to a strategy of contracting multiple smaller PPAs. However, Markbygden points to a deeper need: more advanced PPA structures, particularly hybrid PPAs that combine multiple technologies and storage assets to reduce volume risk. In addition, multi-buyer arrangements such as consortium PPAs may become increasingly relevant for hyperscalers. These arrangements can help manage risk more effectively while capturing economies of scale in procurement.
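The volume-risk argument for hybrid PPAs can be illustrated with two complementary generation profiles. In the sketch below, a wind-only supply and a hybrid supply (a 50/50 mix of the wind and solar profiles) each produce the same 600 MWh over six hours. Against a flat commitment, however, they leave very different shortfalls. The profiles are hypothetical and deliberately chosen to be complementary; in reality, the benefit depends on how correlated the combined assets actually are. `total_shortfall` is an illustrative name.

```python
def total_shortfall(supply_mwh: list[float], commitment_mwh: float) -> float:
    """Energy missing across all intervals relative to a flat per-interval commitment."""
    return sum(max(commitment_mwh - s, 0.0) for s in supply_mwh)


# Hypothetical hourly profiles (MWh), chosen to be complementary; each sums to 600 MWh.
wind = [150.0, 120.0, 40.0, 30.0, 60.0, 200.0]
solar = [0.0, 60.0, 160.0, 180.0, 160.0, 40.0]
# Hybrid portfolio: half of the wind profile plus half of the solar profile.
hybrid = [0.5 * w + 0.5 * s for w, s in zip(wind, solar)]

COMMITMENT = 100.0  # MWh per hour, i.e. 600 MWh over six hours
print(total_shortfall(wind, COMMITMENT))    # 170.0
print(total_shortfall(hybrid, COMMITMENT))  # 35.0
```

Storage would extend the same logic by shifting surplus energy from oversupplied hours into short ones.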

Well-designed PPAs can meaningfully support both price efficiency and supply reliability, but their success depends heavily on proper structuring, risk allocation, and contracting. With regard to decarbonization, the hyperscaler market is already moving toward 24/7 clean energy tracking. Future PPAs will therefore need to support precise reporting frameworks that verify, ideally on a minute-by-minute basis, that renewable electricity is produced at the same time as it is consumed (the principle of simultaneity).
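The difference between annual and simultaneous (24/7) matching can be shown with a small calculation. The sketch below compares two scores. A volumetric score compares only period totals. An interval-by-interval score counts clean supply only in the interval in which it is consumed. The function names and the four-interval data are hypothetical. The same code works at hourly or minute-level resolution as long as both series use the same intervals.

```python
def hourly_matching_score(consumption: list[float], clean_supply: list[float]) -> float:
    """Share of consumption covered by clean supply in the same interval."""
    if len(consumption) != len(clean_supply):
        raise ValueError("series must cover the same intervals")
    matched = sum(min(c, g) for c, g in zip(consumption, clean_supply))
    return matched / sum(consumption)


def volumetric_matching_score(consumption: list[float], clean_supply: list[float]) -> float:
    """Share of consumption covered by clean supply on a period-total basis (capped at 100%)."""
    return min(sum(clean_supply) / sum(consumption), 1.0)


# Hypothetical four-interval example: flat data center load, variable wind supply (MWh).
load = [100.0, 100.0, 100.0, 100.0]
wind = [180.0, 150.0, 40.0, 30.0]

print(f"{volumetric_matching_score(load, wind):.1%}")  # 100.0%
print(f"{hourly_matching_score(load, wind):.1%}")      # 67.5%
```

Over the period, wind production exactly equals consumption, so volumetric matching reports 100%. Under the simultaneity principle, only 67.5% of consumption is actually covered.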

Europe represents a highly attractive market for hyperscale data centers, particularly with regard to PPAs and on-site generation. It offers significant potential to optimize the three dimensions of the strategic triangle: decarbonization, cost efficiency, and supply reliability. At the same time, the enormous electricity demand of this consumer segment meets an energy market that is currently undergoing transformation. This poses substantial challenges for balancing markets and can significantly affect local infrastructure and price formation.

Fit4Prax specializes in solving precisely these complex challenges. We support both green energy providers and operators of energy-intensive assets such as hyperscale data centers. For our clients, we design the optimal electricity portfolio to realize the strategic triangle. Leveraging our extensive network across international energy markets, we structure the most suitable transactions. We also continue to support our clients well beyond contract execution, including contract management and 24/7 carbon tracking.